Four hours of hacking in Tacoma, WA

Foreword

Through four hours of competition across nine categories of technical challenges, from cryptography to network security, students from across Washington demonstrated their knowledge, perseverance, and soft and hard skills in a competition designed to test their limits.

Several months of work by multiple individuals led to this point:

- Dan Wordell, in acquiring the hardware

- Stu Steiner, in datacenter logistics and network troubleshooting

- Terry Yeigh, in ensuring students had the resources they needed and getting his teams through regionals

- Brandon Hirst, Adam Scroggins, and others at SkillsUSA, in helping facilitate the event

And finally myself, in setting up the challenges and judging/scoring the results.

Background – 2019

I had put on a CTF for high school students across Washington in 2019, as part of SkillsUSA’s state-level competition. This was the first year for the competition, and the last time I was involved until now, since COVID threw a wrench in things!

Since it was my first time running a CTF, it doubled as my own personal, frantic hackathon and required a number of decisions:

| Questions | Answers |
| --- | --- |
| Which CTF platform? | CTFd is a good platform. |
| What types of challenges? | I can have any challenge I can program for. |
| How are challenges scored? | Ansible, in a pinch, can retrieve scorable data. |
| How do I minimize student hardware requirements? | Apache Guacamole obviates the need for PuTTY or VPN/RDP. |
| Where am I gonna host this? | Amazon works, but $$$ |

Takeaways

After the 2019 competition, I identified several avenues for improvement:

- More challenge types addressing a broader set of skills.

- More automation for competition setup.

- Include some physical challenges.

- Plan out scoring better.

- Find a hosting platform that can be used long-term without costing a fortune.

Planning for 2022

The technical work for 2022’s competition began several months before I was asked to be involved: Dan Wordell, Information Security Officer at the City of Spokane, had managed to acquire a hypervisor platform (Antsle), which found a permanent home in Eastern Washington University’s datacenter with the backing of Stu Steiner, a well-known Computer Science lecturer at EWU.

Antsle vs Amazon

This Antsle device, a small, low-power server designed to manage containers and virtual machines as an alternative to Amazon AWS, gave me a way to develop a technical challenge platform without Amazon's ongoing OpEx.

After some initial technical problems with the server itself in September 2021, and then with EWU's networking through February 2022, I was finally able to use the device as intended. At this point, however, it was still a blank canvas.

Automation

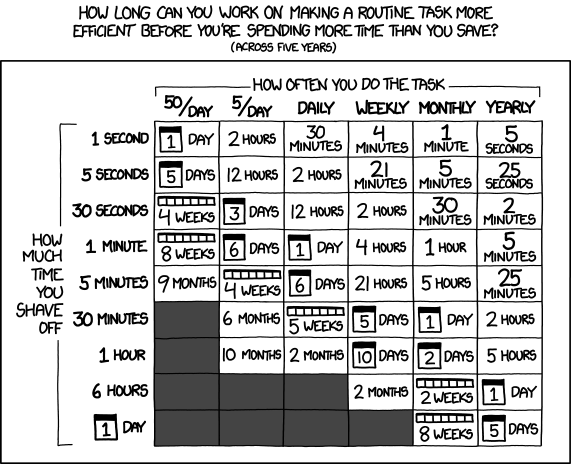

With potentially more teams and more challenges, automation took priority, with the goal of reusing this infrastructure in the future for more frequent competitions within Spokane Public Schools and across the state of Washington.

Terraform was my initial idea: I had a vague notion of Terraform as being like Ansible, but for provisioning, something that could automagically create infrastructure.

But after several hours of initial work, I realized that it really couldn’t do much with Antsle, since the Terraform provider for Antsle supports very little of its API.

In the end, as with 2019, my primary automation tool ended up being ansible. Unlike 2019, I used ansible to a much greater degree and for just about everything conceivable – perhaps it’s more accurate to say I abused ansible, given the level of nested loops and delegate_to.

This code will be available on GitLab for anyone interested in contributing, though at the moment it is a pile of frantic spaghetti and needs a serious refactor!

“Ansible is an open-source software provisioning, configuration management, and application-deployment tool enabling infrastructure as code”

Building the CTF

Warning: Beyond this point lies a lot of technical jargon.

Skip if uninterested.

With the platform already determined (Antsle, CTFd, Guacamole, Ansible, etc.), I began work in earnest about halfway through February. My strategy was to avoid any form of manual configuration and to rely on Ansible as much as possible, even when that meant investing hours in writing custom roles for my specific use case.

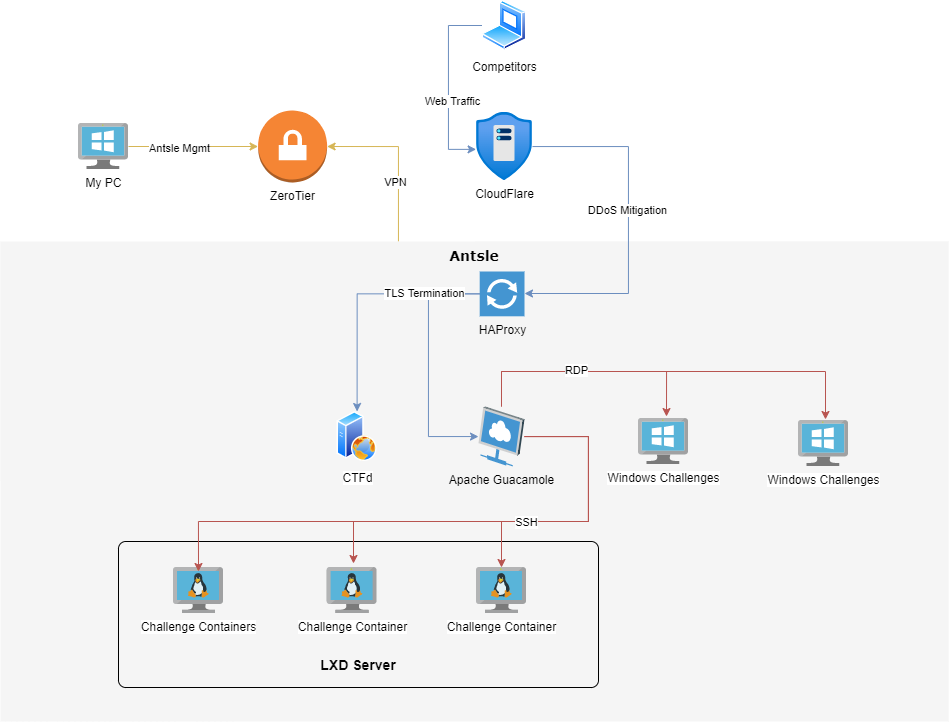

The network topology put Cloudflare and HAProxy in front of everything, with CTFd/Ansible on one Antsle container, Guacamole on another container, a VM hosting multiple Linux challenges via LXD, and one Windows VM per team (all challenge instances being accessible via Guacamole).

Ansible Setup

Using SSH for the Linux containers and WinRM for the Windows VMs (I set up WinRM on one Windows VM, then cloned it within Antsle to save time), I got Ansible running on the CTFd machine. This served two purposes:

- Provision and coordinate configuration changes across all the infrastructure

- Facilitate scoring of interactive technical challenges

Provisioning with Ansible

I had an evolving YAML spec that defined challenge machines and team memberships. Since I added Windows support toward the end, there is some redundancy and inconsistency in how the YAML is defined. The process ended up mostly automated, though CTFd configuration remained mostly manual beyond the initial installation.

challenge_fqdn: "linux.cyberpatriot.ewu.edu"

win_challenge_fqdn: "windows.cyberpatriot.ewu.edu"

managed_dns_group: "{{ groups.main }}"

guacamole_api: https://rdp.wa-cyberhub.org/guacamole

guacamole_admin: ----

guacamole_pass: -----

common_machines:

- name: scantarget

os: ubuntu20.04

net:

int: eth0

address: X.X.X.X

mask: 255.255.255.0

maskbits: 24

gateway: X.X.X.X

dns: X.X.X.X

roles:

- challenge-hiddenweb

windows_machines:

worker:

roles:

- foo

user_machines:

scan:

os: ubuntu20.04

net:

int: eth0

mask: 255.255.255.0

maskbits: 24

gateway: X.X.X.X

dns: X.X.X.X

roles:

- challenge-forensic1

teams:

- name: TeamName

org: Some High School

windows:

- name: worker

fqdn: team1.windows.cyberpatriot.ewu.edu

os: windows

ip: X.X.X.X

users:

- name: user1

password: -----

contact:

name: User One

email: foo@bar.com

- name: user2

password: -----

contact:

name: User Two

email: foo@bar.com

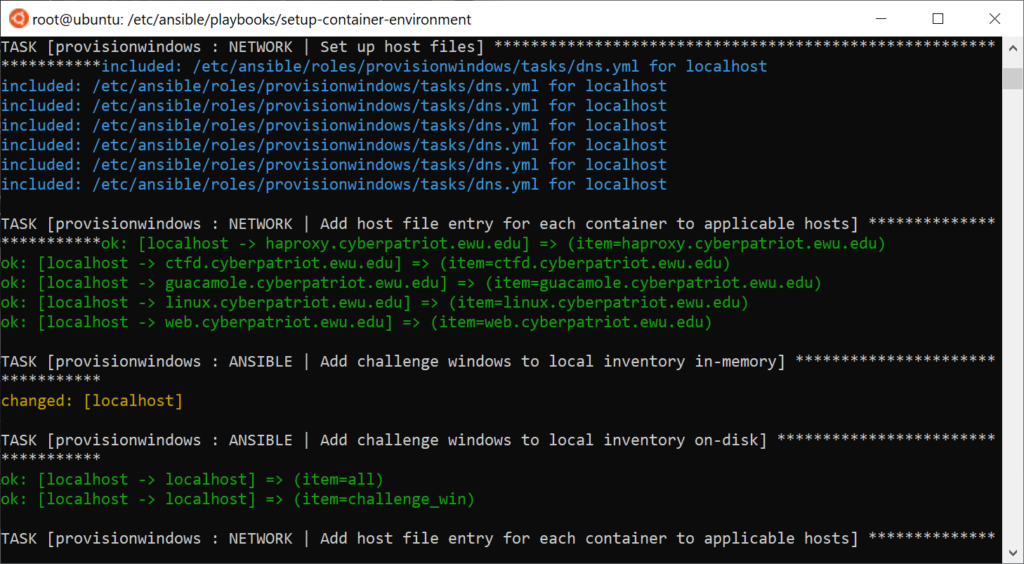

machines:

- name: scan

address: X.X.X.XWith the above YAML spec, my playbook/roles then took care of provisioning all the challenge containers, configured authentication across containers and Windows VMs, applied ansible challenge roles, configured Guacamole connections/users, configured HAProxy front/backends, requested certificates from LetsEncrypt, and maintained host file changes across all of the machines, modifying the Ansible inventory on disk and while running.

In theory, I could add new Linux challenges very easily:

- Create roles in Ansible for the challenge (deploy backdoor, etc.),

- Define the team machine template under user_machines and assign Ansible challenge roles,

- Assign that machine template to each team with an IP address,

- Run the playbook,

- ???

- Ansible does the rest:

  - New containers are provisioned and bootstrapped,

  - Host file entry for container is added,

  - Ansible inventory updated with the new container in the right groups,

  - Team member accounts are created on their team’s containers,

  - Guacamole connection is added for each new container, for each applicable team member,

  - Guacamole user profile is created (if needed) and the new connections are attached to the respective user profile,

  - Some other stuff.
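The “Ansible does the rest” step is essentially a join between each team's machine assignments and the user_machines templates. Here is a minimal Python sketch of that expansion, assuming the spec has already been loaded into a dict (for example by a YAML parser). The `{team}-{machine}` naming scheme, the lower-casing, and the `Container` shape are hypothetical illustrations, not what the actual roles produce.

```python
from dataclasses import dataclass, field


@dataclass
class Container:
    """One team-specific challenge container (hypothetical shape)."""
    name: str
    fqdn: str
    address: str
    os: str
    roles: list[str]
    users: list[str] = field(default_factory=list)


def expand_spec(spec: dict) -> list[Container]:
    """Merge each team's machine assignments with the user_machines templates."""
    containers = []
    for team in spec["teams"]:
        team_slug = team["name"].lower()
        usernames = [u["name"] for u in team.get("users", [])]
        for assignment in team.get("machines", []):
            template = spec["user_machines"][assignment["name"]]
            # Hypothetical naming convention: <team>-<machine>
            name = f"{team_slug}-{assignment['name']}"
            containers.append(Container(
                name=name,
                fqdn=f"{name}.{spec['challenge_fqdn']}",
                address=assignment["address"],  # per-team IP from the team entry
                os=template["os"],              # OS and roles come from the template
                roles=list(template.get("roles", [])),
                users=usernames,
            ))
    return containers


def hosts_lines(containers: list[Container]) -> list[str]:
    """Render /etc/hosts entries for every container."""
    return [f"{c.address}\t{c.fqdn} {c.name}" for c in containers]


def guacamole_connections(containers: list[Container]) -> list[tuple[str, str]]:
    """One (user, connection name) pair per container per team member."""
    return [(user, c.name) for c in containers for user in c.users]
```

Keeping the expansion a pure function of the spec makes it easy to rerun after a spec change, such as merging two teams, and compare the result before touching any infrastructure.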

A big challenge here involved a lot of hacky use of “lxc exec” to pseudo-delegate bootstrapping commands to the containers, which booted without DHCP because they were bridged directly to the host network. Combining loops with delegation in Ansible is also very unintuitive.
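Without DHCP, each container needs a static network configuration before Ansible can reach it over SSH, which is where “lxc exec” comes in. Here is a minimal sketch of that pseudo-delegation, assuming Ubuntu 20.04 containers that use netplan (the `gateway4` key is valid there but deprecated in later releases). The file path `/etc/netplan/50-static.yaml` and the exact commands are assumptions for illustration. The sketch only builds the argument lists, which could then be handed to `subprocess.run`.

```python
import shlex


def render_netplan(net: dict, address: str) -> str:
    """Render a static netplan config (Ubuntu 20.04 syntax) from a spec 'net' block."""
    return (
        "network:\n"
        "  version: 2\n"
        "  ethernets:\n"
        f"    {net['int']}:\n"
        f"      addresses: [{address}/{net['maskbits']}]\n"
        f"      gateway4: {net['gateway']}\n"
        "      nameservers:\n"
        f"        addresses: [{net['dns']}]\n"
    )


def bootstrap_commands(container: str, net: dict, address: str,
                       path: str = "/etc/netplan/50-static.yaml") -> list[list[str]]:
    """Build `lxc exec` argv lists that write the config and apply it inside the container."""
    netplan = render_netplan(net, address)
    # printf %s writes the text verbatim; shlex.quote keeps the newlines intact for sh
    write = f"printf %s {shlex.quote(netplan)} > {shlex.quote(path)}"
    return [
        ["lxc", "exec", container, "--", "sh", "-c", write],
        ["lxc", "exec", container, "--", "netplan", "apply"],
    ]
```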

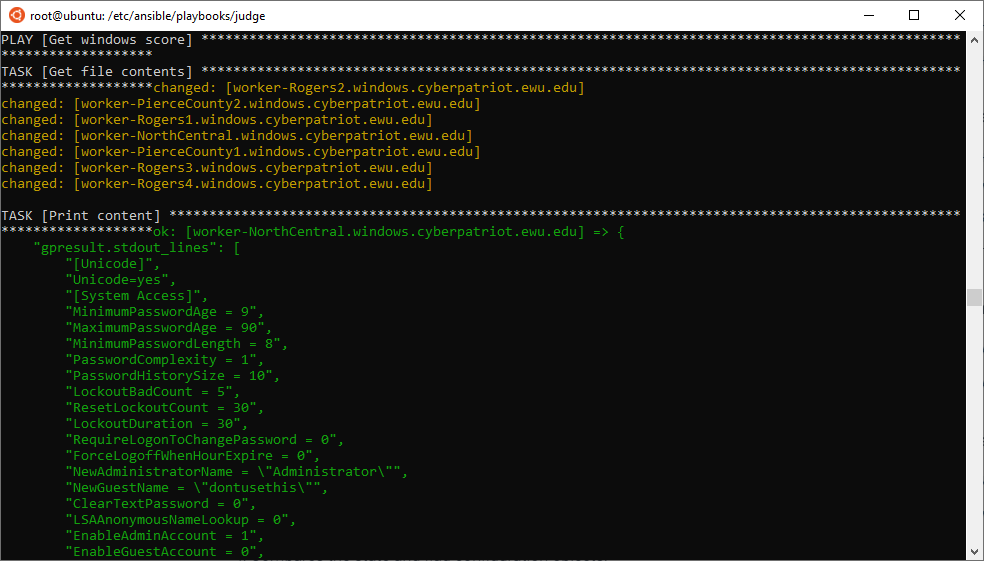

Scoring with Ansible

In 2019, I had also used Ansible to score Windows challenges:

- Ansible playbook generates/runs a scheduled task on a given system:

  - Scheduled task runs gpresult and outputs XML,

  - Ansible returns the XML via stdout.

- Custom CTFd challenge plugin:

  - Calls ansible-playbook,

  - Parses XML output,

  - Returns JSON of key->value pairs,

  - Compares it to JSON flag.

This time, rather than setting up two machines per team (one domain controller and one domain member), I used a single machine per team and relied on secedit. This had its drawbacks but was simpler in the end:

- Ansible playbook generates/schedules/runs a scheduled task on a given system:

  - The task exports the security policy with secedit to an .ini file,

  - The INI contents are returned via stdout.

- Custom CTFd plugin runs the playbook:

  - Uses environment variables to:

    - Make Ansible return JSON-formatted output rather than a stream of cowsays,

    - Limit execution to a single host defined in a CLI argument.

  - Flexibly parses INI output to a Python configuration object,

  - Flexibly parses the flag (e.g., JSON) to a comparable Python configuration object,

  - Compares the state and flag configuration objects.
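The 2022 pipeline could look roughly like the following Python sketch. It assumes that the `json` stdout callback is available (in recent Ansible releases it lives in the ansible.posix collection), that the playbook's task prints the exported INI to stdout, and that flags are JSON objects shaped like {section: {key: value}}. The function names and flag format are hypothetical, not the actual plugin's code, and host limiting here uses the `--limit` option.

```python
import configparser
import json
import os
import subprocess


def run_playbook(playbook: str, host: str) -> str:
    """Run the export playbook against one host and return Ansible's JSON report."""
    env = dict(os.environ,
               ANSIBLE_STDOUT_CALLBACK="json",  # machine-readable output
               ANSIBLE_NOCOWS="1")             # no cowsay banners
    result = subprocess.run(
        ["ansible-playbook", playbook, "--limit", host],
        env=env, capture_output=True, text=True, check=False,
    )
    return result.stdout


def extract_stdout(report: str, host: str) -> str:
    """Return the stdout of the last task that produced any output for the host."""
    data = json.loads(report)
    found = ""
    for play in data.get("plays", []):
        for task in play.get("tasks", []):
            out = task.get("hosts", {}).get(host, {}).get("stdout")
            if out:
                found = out
    return found


def parse_secedit(ini_text: str) -> dict[str, dict[str, str]]:
    """Parse a secedit export into {section: {key: value}}."""
    parser = configparser.ConfigParser(
        delimiters=("=",), interpolation=None, strict=False)
    parser.optionxform = str  # keep key case, e.g. MinimumPasswordLength
    parser.read_string(ini_text.lstrip("\ufeff"))  # drop a UTF-16 BOM if present
    return {s: {k: v.strip() for k, v in parser[s].items()}
            for s in parser.sections()}


def check_flag(state: dict, flag_json: str) -> bool:
    """True if every section/key in the JSON flag equals the exported value."""
    flag = json.loads(flag_json)
    return all(
        state.get(section, {}).get(key) == str(expected)
        for section, keys in flag.items()
        for key, expected in keys.items()
    )
```

Splitting the invocation from the parsing keeps the comparison logic testable without a live Windows VM.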

CTFd Setup

While CTFd installation was automated through Ansible, I still had to do a number of tasks within CTFd:

- Set up users and their passwords

- Configure teams

- Create custom plugins for challenges

- Create challenges/flags

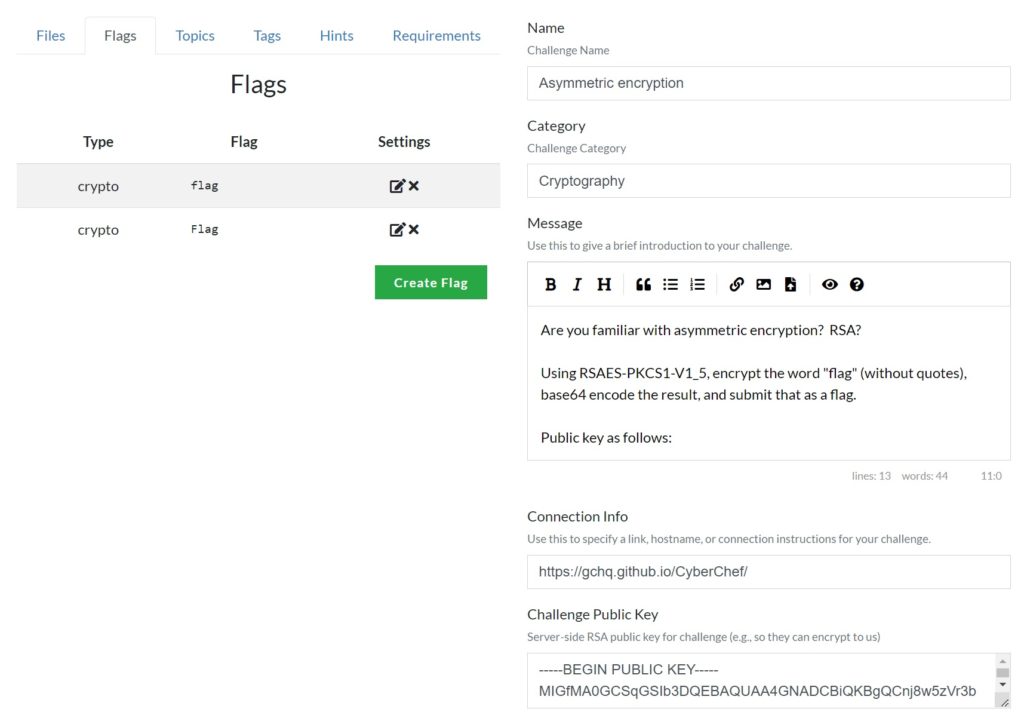

The custom challenge plugins were expanded vs 2019, and this time included custom flags as well:

- Crypto Challenge

- Creating/using different forms of cryptography.

- Crypto Flag

- Scoring RSA encryption/decryption, verifying against previously submit public keys.

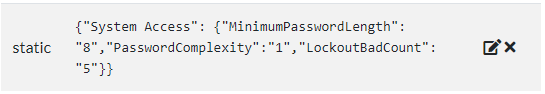

- Secpol Challenge

- Score correct security settings on Windows.

- SSL Challenge

- Test TLS server parameters.
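The crypto flag's internals aren't described here, but one plausible scheme for scoring RSA against a previously submitted public key works in two directions. The server encrypts a random number with the team's public key and asks them to decrypt it. Conversely, a team encrypts a known value with a server key, and the server decrypts it to check. The sketch below assumes that scheme and uses textbook RSA with tiny primes and no padding purely to show the arithmetic. A real plugin would use a cryptography library with proper padding. All function names are hypothetical.

```python
import secrets


def issue_challenge(n: int, e: int) -> tuple[int, int]:
    """Pick a random secret m < n and encrypt it with the team's public key (n, e)."""
    m = secrets.randbelow(n - 2) + 2  # avoid the trivial values 0 and 1
    return m, pow(m, e, n)


def check_decryption(expected_m: int, submitted: int) -> bool:
    """The team proves it holds the private key by returning the plaintext."""
    return submitted == expected_m


def check_encryption(ciphertext: int, n: int, d: int, expected_m: int) -> bool:
    """The server decrypts a team's ciphertext with its own private key (n, d)."""
    return pow(ciphertext, d, n) == expected_m
```

With the classic toy key p = 61, q = 53 (so n = 3233, e = 17), the private exponent is `pow(17, -1, 3120)`, which is 2753 (Python 3.8+). Encrypting 65 gives 2790, and decrypting 2790 gives back 65.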

I have very little experience with Flask or SQLAlchemy, so the learning curve was pretty steep. I also kept running into caching issues, constantly needing to rename classes and folders whenever an iteration changed the DB schema or static assets. Clearly I didn’t do my homework.

Designing the Challenges

Reducing the focus on forensic questions took priority. These types of questions are often over-represented in CTFs, since they are the simplest to implement (find the flag!), but they also skew the skill sets being tested.

Each student would need a laptop, and since the advisors assured me that students would at least have Wireshark and PuTTY, my options weren't limited purely to what was accessible via Guacamole.

My approach was to consult the SkillsUSA guidelines for the competition and base the challenges on them. Since time was limited, I tried to sample as many areas as possible. For example, here are several types of challenges that I implemented:

CSC 1 – Professional Activities

For this first category, no infrastructure was necessary at all!

I decided on a Technical Interview portion, which consisted of:

- Each contestant introduces themselves as if they were applying to an entry-level cyber security job.

- One contestant rolls two dice: the yellow one for even/odd and the white one for a number.

- A technical question is selected from the front (even on the yellow die) or back (odd on the yellow die), out of six options (white die).

- The team is given a minute to coordinate a response.

- Both team members give a collaborative answer to the question.

They were then evaluated on the following criteria:

- Presentation (10) – Dress, posture/eye contact, professionalism.

- Understandability (10) – How well they explain themselves and whether they can explain the concepts comfortably.

- Accuracy (20) – Technical accuracy of their answers, depth of knowledge.

In the future, I’ll add an option to re-roll for a different question.

CSC 2 – Endpoint Security

Contestants will display knowledge of industry standard processes and procedures for hardening an endpoint or stand-alone computing device.

Cyber Security Standards, SkillsUSA 2022

Using the secedit challenge plugin, I was able to create a series of challenges where contestants interpreted a business problem and then implemented a solution by modifying the local security policy.

I realized, with too little time to spare, that I should have added comparison operators other than “=”. Why fail a password complexity question when a team sets the minimum length to 9 instead of 8?
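Such a comparison could be added with a small operator-prefix parser. This sketch assumes a hypothetical flag syntax in which an expected value may start with >=, <=, !=, >, < or =. Numeric values are compared as integers, and anything else falls back to string equality or inequality.

```python
import operator

# Hypothetical operator prefixes for flag values; longer prefixes come first
# so that ">=" is matched before ">".
OPS = [(">=", operator.ge), ("<=", operator.le), ("!=", operator.ne),
       (">", operator.gt), ("<", operator.lt), ("=", operator.eq)]


def matches(actual: str, expected: str) -> bool:
    """Compare an exported policy value against a flag value with an optional operator."""
    expected = str(expected).strip()
    op = operator.eq
    for prefix, fn in OPS:
        if expected.startswith(prefix):
            op, expected = fn, expected[len(prefix):].strip()
            break
    try:
        return op(int(actual), int(expected))
    except ValueError:
        # Non-numeric values (e.g. SID lists) only support equality/inequality.
        if op in (operator.eq, operator.ne):
            return op(actual.strip(), expected)
        return False
```

A flag value of >=8 for the minimum password length would then accept 9 as well as 8.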

CSC 3,4,6 – Switch/Router/Boundary Security

Logistically speaking, this was one of the more difficult items. Initially I had a grand plan for some kind of multi-segment Docker network for each team filled with VyOS containers, but eventually I settled on a technically simpler plan: physical Cisco ASA 5500s and a Raspberry Pi.

My problem here was threefold:

- I only had two spare ASAs – but there were seven registered teams.

- I didn’t have any managed switches or regular routers.

- I needed to fit this all in the trunk of my already-cluttered Toyota Corolla.

I addressed the second point by crafting the challenge in such a way that some knowledge of switching, routing, and firewalling was necessary to complete the challenge – these devices already fill multiple roles anyway.

The first point was addressed by setting a time limit: if both ASAs were already in use, the team that had started first would be booted an hour after their start or 20 minutes from that moment, whichever was later.

After gathering a handful of serial adapters, power strips, power cables, spare laptops (a 2009 MacBook running Xubuntu!), and the Cisco ASAs, I just managed to fit everything in my trunk alongside my suitcase, laptop/binder bag, and emergency supplies.

With serial adapters and spare laptops on hand in case of driver issues on the school-issued laptops, four teams tried their luck at applying networking knowledge across multiple OSI layers to reach a flag located on the Raspberry Pi.
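The hand-off rule is simply the later of two deadlines. Here is a tiny sketch with arbitrary example times (the function name and dates are illustrative):

```python
from datetime import datetime, timedelta


def boot_time(first_team_start: datetime, now: datetime) -> datetime:
    """When the team holding an ASA must hand it over: one hour after it started,
    or 20 minutes from now, whichever is later."""
    return max(first_team_start + timedelta(hours=1), now + timedelta(minutes=20))
```

A team that started at 9:00 keeps the ASA until 10:00 if another team asks at 9:15, but until 10:10 if the request comes at 9:50.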

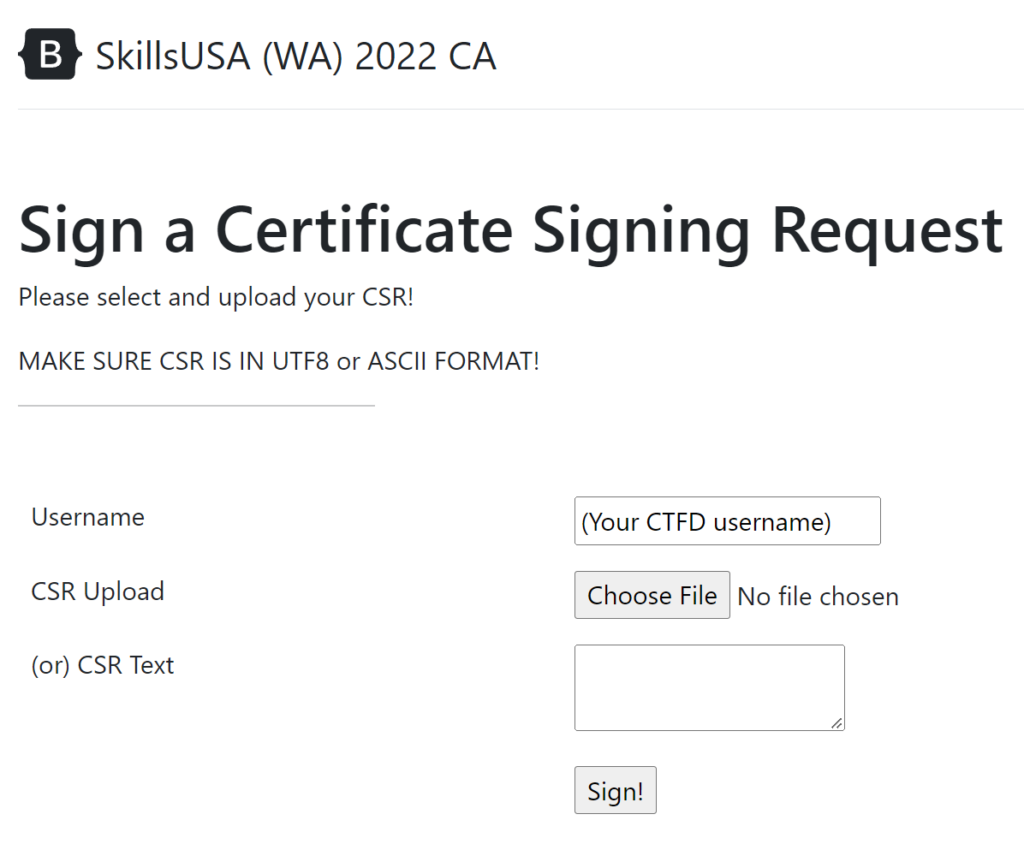

CSC 5 – Server Hardening

This task contains activities related to hardening servers against attack.

Cyber Security Standards, SkillsUSA 2022

To my mind, ‘server hardening’ covers either hardening the operating system environment or hardening applications. With that in mind, I proposed two challenges. The first involved setting up a Domain Controller with a security-related GPO, which would improve the security of every operating system on the network. The second involved correctly configuring TLS on a webserver using an internal Certificate Authority.

Due to a shortage of time, the Domain Controller challenge was scored manually. The Certificate Authority challenge required me to:

- Set up a certificate authority cert/key and config in OpenSSL.

- Create a basic web app in PHP that could sign certificate signing requests and return the resulting certificate.

- Create a custom CTFd plugin to test the TLS validity of the website.

In the end, rather than securing the internal CA against attack, I reused “intentionally” vulnerable code for a separate pentesting challenge.
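The TLS validity check from the Certificate Authority challenge can rely almost entirely on Python's standard ssl module, which verifies the certificate chain and hostname by default. Here is a minimal sketch, assuming the internal CA certificate is available as a PEM file passed in as `cafile`. The CTFd plugin wiring is omitted, and the function name is hypothetical.

```python
import socket
import ssl
from typing import Optional, Tuple


def check_tls(host: str, port: int = 443, cafile: Optional[str] = None,
              timeout: float = 5.0) -> Tuple[bool, str]:
    """Connect to host:port and verify the certificate chain and hostname.

    cafile would point at the internal CA certificate (hypothetical path).
    """
    # With cafile set, only that CA is trusted; otherwise system defaults are used.
    context = ssl.create_default_context(cafile=cafile)
    try:
        with socket.create_connection((host, port), timeout=timeout) as sock:
            with context.wrap_socket(sock, server_hostname=host) as tls:
                return True, f"{tls.version()} {tls.cipher()[0]}"
    except ssl.SSLCertVerificationError as exc:
        return False, f"certificate rejected: {exc.verify_message}"
    except (OSError, ssl.SSLError) as exc:
        return False, f"connection failed: {exc}"
```

Passing only the internal CA means a self-signed certificate, or one from any other authority, fails verification. Further checks such as minimum protocol version could be layered on the returned `tls.version()`.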

CSC 8 – Network Forensics

This task contains activities related to network forensic activities associated with Incident Response Actions. Contestants will use appropriate measures to collect information from a variety of sources to identify, analyze and report cyber events that occur

Cyber Security Standards, SkillsUSA 2022

Since I work in network security myself, this has always been one of my favorite areas. I tried to restrain myself, but a number of forensics-focused challenges remained:

- Wireshark challenges – I captured traffic on my own machine while using several plaintext protocols, and crafted scenarios to answer based on those protocols

- Linux challenges – Reverse shell detection and analysis

- Windows challenges – Bot detection and joining the C&C IRC to find the botmaster name

- Digital Forensics – Analysis of artifacts from a breach (deobfuscation and interpretation of backdoor shells) as well as web log analysis

Aside from the initial setup, these were pretty easy to score, since scoring simply involved entering the flag values into CTFd.

CSC 9 – Pentesting

This task contains activities related to the process of penetration testing…Hack a specified file (flag) in a remote network.

Cyber Security Standards, SkillsUSA 2022

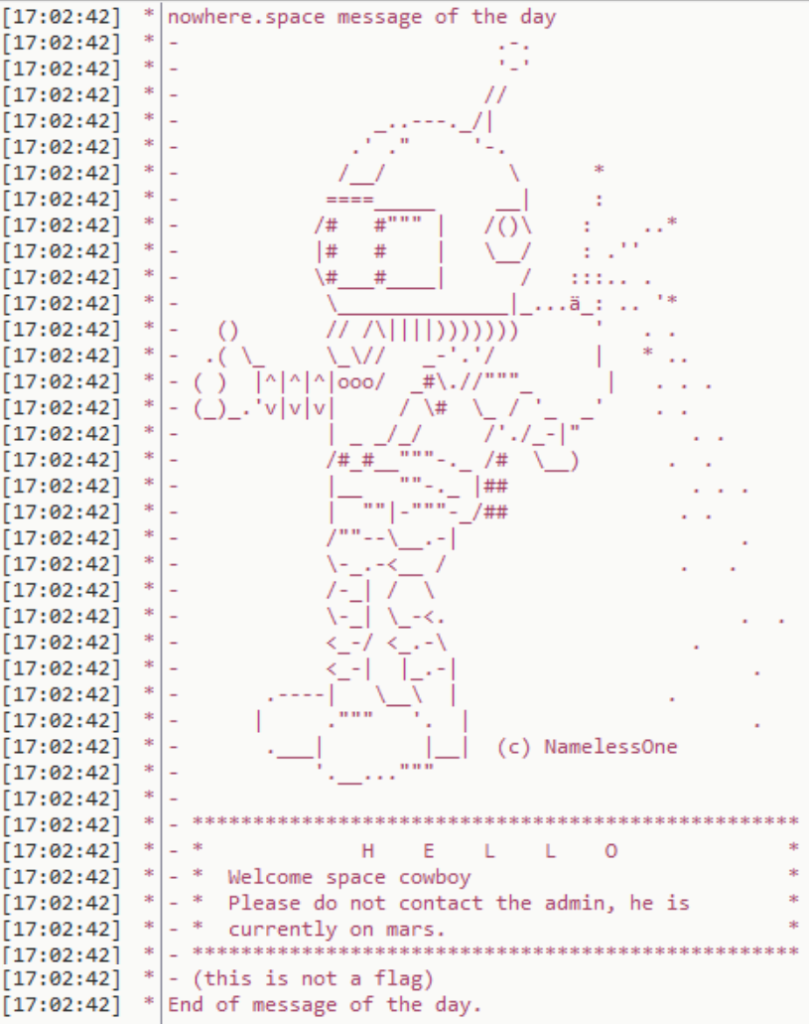

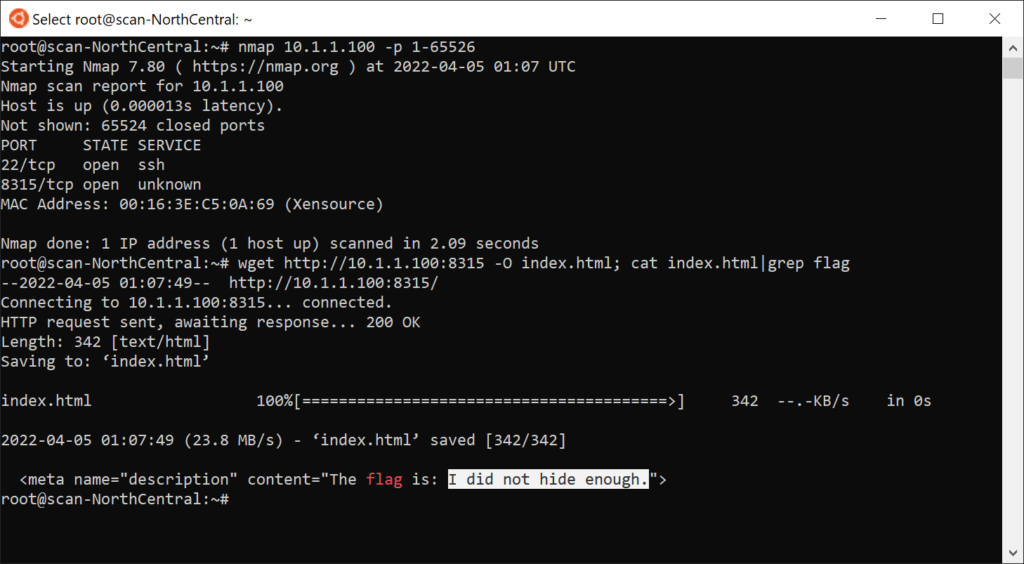

Always one of the more fun contests, since it’s something that would otherwise potentially be illegal! Two types of pentest challenges were available: one involving port scanning and one involving abuse of input validation.

The nmap challenge sounds simple, though it requires several skills: knowing how to install applications in Linux, knowing how to use nmap and interpret its output, knowing which command-line tools can request a webpage, and then reading the website's source code closely enough to find the flag.

Omissions

The astute readers among you may notice that I skipped CSC 7, the wireless challenge. I did not have the resources for a wireless challenge this time, though I tossed around a few ideas with varying levels of feasibility:

- Locate a hidden access point in the building using a wardriving app?

  - Not everyone has a laptop with capable hardware; smartphone apps might be a workaround.

- Bypass MAC filtering on an otherwise open AP?

  - Again, depends on laptop/Wi-Fi card hardware.

- Configure a secure access point with a hidden SSID and connect to it?

  - Requires some additional hardware in my trunk, and scoring is difficult to automate.

Additionally, I had to consolidate all of the networking sections into one challenge, which is less than ideal.

Judging and Scoring a CTF

Beyond the technical challenges involved in provisioning this infrastructure and setting up challenges, a surprising amount of time ended up going into paperwork: a scoring rubric, handouts, lists of questions, etc.

Scoring Rubric

I had initially developed scoring guidelines without any official instructions: resumes and technical interviews graded on several criteria, written tests (Ethical Hacker), and CTF/technical challenges, all weighted as percentages. Individual challenges within the CTF had largely arbitrary scores across 11+ categories, set by difficulty (such that some categories were worth much more than others).

At the last minute, I received the official scoring guide, and with about an hour to spare I worked on re-valuing and re-categorizing all my technical challenges such that I had nine categories of technical challenges each worth 100 points.

After the competition, I realized that the total points had to add up to 1000, but with the technical interview and two written tests I had 1200. So I just multiplied everything by 83% across the board and gave a few extra percent to the technical interview to get a nice round 1000.
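The arithmetic behind that adjustment, assuming the 83% factor was applied to the full 1200 points:

```latex
\begin{aligned}
1200 \times 0.83 &= 996 \\
1000 - 996 &= 4 \\
\tfrac{1000}{1200} &= \tfrac{5}{6} \approx 0.833
\end{aligned}
```

The scaled total lands 4 points short of 1000, which is the gap the extra weight on the technical interview had to cover. An exact factor would have been 5/6.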

Running and Judging the CTF

Another eye-opening, last-minute development was that two students missed the competition, so their respective teams were being consolidated into one. This should have been a perfect use case for Ansible, and I might have been able to address the problem very quickly with a simple change to the YAML file.

However, I ended up doing it manually: I didn’t trust the automation, at least not with only a few minutes to spare. In retrospect, it would have been a good idea to destroy and rebuild the whole automated infrastructure several times during development.

Beyond that, there were several minor technical problems (e.g., a typo in a flag), and one of the spare laptops I brought ended up getting used. As in 2019, in retrospect I should have given out more free hints.

Game Day

Preparation began at about six in the morning, with several trips down the escalator (and a flight of stairs) to bring the ASAs, a switch, laptops, boxes of cords, my own laptop, a binder of handouts, and several power strips into the competition area.

After setting everything up, I had to scramble and re-value/categorize the challenges, altering some systems for the team changes I mentioned earlier – but soon enough the competition was well under way.

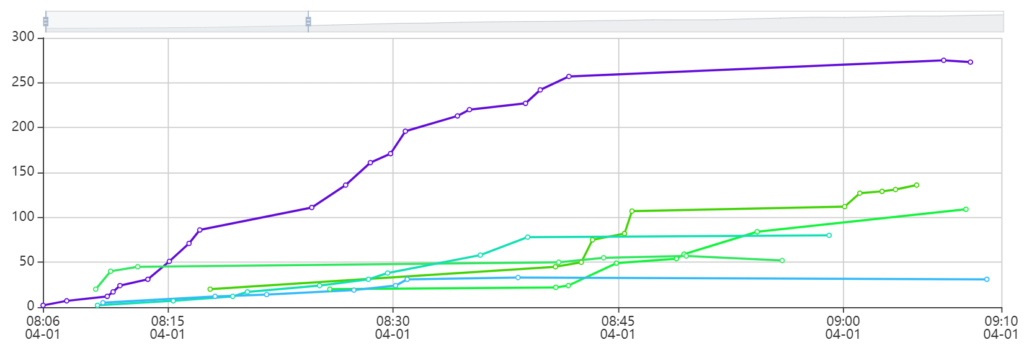

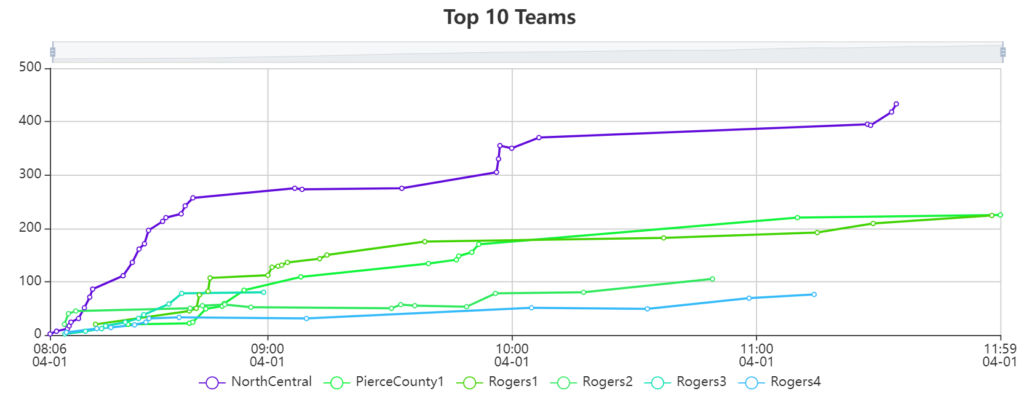

Teams earned points more readily early on, which suggests I succeeded in adding entry-level challenges. More intermediate challenges (additional challenges within Endpoint Protection and other more accessible categories) should be added in the future to keep all teams engaged, rather than frustrated at a single challenge for two hours.

At the half-way mark, I gave a reminder about the Cisco challenge – four teams scrambled to take a whack at it, one with 15 minutes on the clock.

None of the teams successfully completed the Cisco challenge, but I ended up giving some points for the attempt (they at least got logged in and started configuring it, despite never having touched PuTTY or a serial cable in their lives).

Final Scores

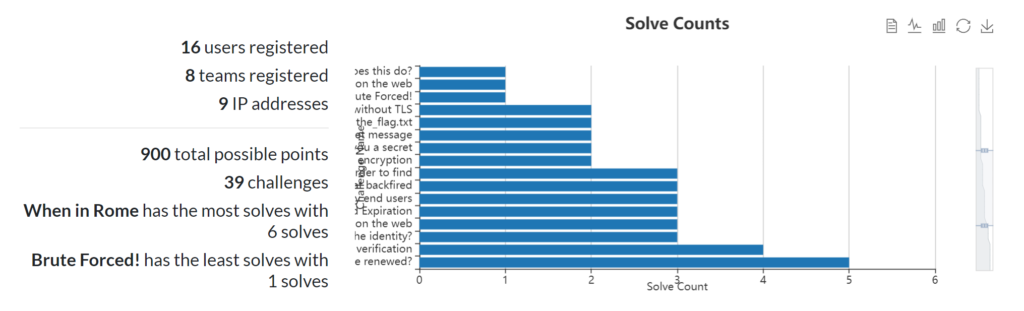

At the end of the competition, one team was firmly in the lead while the next two teams were separated by a single point!

I wouldn’t know the final scores until the next day, since I didn’t proctor the written tests, but the awards ceremony revealed that the final scores were in the same order as the CTF scores.

Future Work

While I believe 2022 was tremendously more successful than 2019, I have a list of items to improve upon, roughly in ascending order of difficulty:

- Refactor the scoring rubric to reflect official guidelines (not an hour before the competition).

- Improve the technical interview portion.

  - Add more technical interview questions (20-sided die?)

  - Allow limited re-rolls.

- Add more intermediate challenges.

  - More server and endpoint hardening.

- Add some type of Wi-Fi challenges.

- Refactor all the Ansible code so it’s less of a jumble.

- More networking challenges: maybe the VyOS Docker lab idea will see daylight?

  - Packet Tracer license?

  - GNS3 emulation of Cisco gear sounds nice but is legally sketchy.

- Make a more formal system for managing CTFd and Ansible together.

  - Ansible Tower?

  - CTFd plugin?
